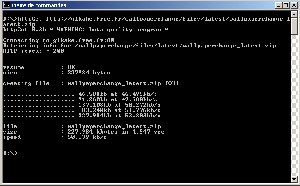

A small program for downloading any web content over an HTTP connection.

It uses low-level sockets.

This program is roughly equivalent to the famous wget, though it is very basic and does not support FTP. It connects to a web server and requests information about the given URL with the HEAD command. If that succeeds, it parses information about the file from the HTTP response header, such as Content-Length, Location, and Accept-Ranges. If the file has moved, it can switch to the new site. It then downloads the file with the GET command. The file is retrieved asynchronously, and the current progress is printed when the size is known.
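
The HEAD-then-GET sequence and the header parsing described above could look roughly like this in C. It is a minimal sketch, not the code from httpget: `build_request` and `get_header` are hypothetical helpers, the request uses HTTP/1.0 with `Connection: close` (an assumption that keeps the server from answering with chunked transfer encoding), and it relies on C99 `snprintf` (MSVC6 only offers `_snprintf`). Header names are matched case-insensitively because servers vary in how they capitalize them.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Build a HEAD or GET request for `path` on `host` (hypothetical helper).
   `path` is sent verbatim, so a "?query" part is kept as-is.
   Returns the request length, or -1 if `buf` is too small. */
int build_request(char *buf, size_t size, const char *method,
                  const char *host, const char *path)
{
    /* HTTP/1.0 is an assumption here: servers must not answer a 1.0
       request with chunked encoding, which keeps body handling simple. */
    int n = snprintf(buf, size,
                     "%s %s HTTP/1.0\r\n"
                     "Host: %s\r\n"
                     "Connection: close\r\n"
                     "\r\n",
                     method, path, host);
    return (n < 0 || (size_t)n >= size) ? -1 : n;
}

/* Case-insensitive check that `line` starts with "name:". */
static int name_matches(const char *line, const char *name, size_t nlen)
{
    size_t i;
    for (i = 0; i < nlen; i++)
        if (tolower((unsigned char)line[i]) != tolower((unsigned char)name[i]))
            return 0;
    return line[nlen] == ':';
}

/* Copy the value of header `name` from a raw header block into `out`,
   trimming surrounding spaces, tabs and the trailing '\r'.
   Returns 1 if the header was found, 0 otherwise. */
int get_header(const char *headers, const char *name,
               char *out, size_t outsize)
{
    size_t nlen = strlen(name);
    const char *line = headers;

    if (outsize == 0)
        return 0;
    while (*line) {
        const char *eol = strchr(line, '\n');
        const char *end = eol ? eol : line + strlen(line);

        if (name_matches(line, name, nlen)) {
            const char *v = line + nlen + 1;   /* skip "Name:" */
            size_t len;
            while (v < end && (*v == ' ' || *v == '\t'))
                v++;
            while (end > v && (end[-1] == '\r' || end[-1] == ' ' || end[-1] == '\t'))
                end--;
            len = (size_t)(end - v);
            if (len >= outsize)
                len = outsize - 1;             /* truncate to fit */
            memcpy(out, v, len);
            out[len] = '\0';
            return 1;
        }
        if (!eol)
            break;
        line = eol + 1;
    }
    return 0;
}
```

Once `get_header` has returned the `Content-Length` value, converting it with `strtol` gives the total size needed for the progress display, and a `Location` value supplies the new URL to connect to when the file has moved.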

I've tried it on a ShoutCast server with the new -h option and it works, so you can easily rip an MP3 stream from the net and write it to a usable MP3 file ;)

The current version is 0.3e and should still be considered beta quality, even though files were retrieved correctly from every server I tested (as long as the files actually existed) ;)

That is mostly because I am not sure the header parsing and the body offset are handled correctly: I learnt to do that by myself, just trying and making mistakes until it worked :(
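
One common way to find the body offset is sketched below, assuming the bytes received so far are accumulated in a buffer. The header ends at the first empty line, so the body starts right after the first `\r\n\r\n` (or a bare `\n\n`, which some lax servers send). `find_body_offset` is a hypothetical name, not a function from httpget. It takes an explicit length instead of a NUL-terminated string, so binary body bytes that arrive in the same buffer do not confuse it.

```c
#include <stddef.h>

/* Return the offset of the first body byte in buf[0..len), i.e. just past
   the empty line that ends the header block, or -1 if that empty line
   has not arrived yet (keep reading from the socket and call again).
   Accepts the standard CRLF CRLF terminator and a bare LF LF. */
long find_body_offset(const char *buf, size_t len)
{
    size_t i;
    for (i = 0; i + 1 < len; i++) {
        /* lax servers: "\n\n" */
        if (buf[i] == '\n' && buf[i + 1] == '\n')
            return (long)(i + 2);
        /* standard: "\r\n\r\n" */
        if (i + 3 < len && buf[i] == '\r' && buf[i + 1] == '\n' &&
            buf[i + 2] == '\r' && buf[i + 3] == '\n')
            return (long)(i + 4);
    }
    return -1;
}
```

Any bytes after the returned offset that arrived together with the header already belong to the file and must be written out before reading further from the socket; forgetting them silently drops the first bytes of the download.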

Change Log 3d~3e:

Added PHP and CGI request types (URLs containing a '?'), so requests like "www.host.com/dir/pl.html?file=thefile" should now work fine (I hope :p). I tried giving such a URL to wget and it didn't work, so I'm happy that my program can handle it :D
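
A sketch of how a URL with a query string can be split for the request, assuming the rest of the program only needs host, port and path. `split_url` is a hypothetical helper, not httpget's own code. The host part ends at the first '/' or '?', and everything after it, including the query, is passed through unchanged as the request path.

```c
#include <stdlib.h>
#include <string.h>

/* Split "[http://]host[:port][/path][?query]" into host, port and path
   (hypothetical helper). Everything from the first '/' or '?' on is kept
   as the request path, so query strings pass through unchanged.
   Returns 0 on success, -1 if the host is empty or a buffer is too small. */
int split_url(const char *url, char *host, size_t hostsize,
              int *port, char *path, size_t pathsize)
{
    const char *p = url;
    const char *host_end;
    const char *colon;
    size_t hlen;

    if (strncmp(p, "http://", 7) == 0)
        p += 7;

    host_end = p + strcspn(p, "/?");          /* host stops at '/' or '?' */
    colon = memchr(p, ':', (size_t)(host_end - p));
    *port = colon ? atoi(colon + 1) : 80;     /* default HTTP port */
    hlen = colon ? (size_t)(colon - p) : (size_t)(host_end - p);
    if (hlen == 0 || hlen >= hostsize)
        return -1;
    memcpy(host, p, hlen);
    host[hlen] = '\0';

    if (*host_end == '\0') {                  /* no path at all */
        if (pathsize < 2)
            return -1;
        strcpy(path, "/");
    } else if (*host_end == '?') {            /* "host?query": add the '/' */
        if (strlen(host_end) + 2 > pathsize)
            return -1;
        path[0] = '/';
        strcpy(path + 1, host_end);
    } else {                                  /* "/dir/pl.html?file=..." */
        if (strlen(host_end) + 1 > pathsize)
            return -1;
        strcpy(path, host_end);
    }
    return 0;
}
```

With the example from the change log, the host becomes "www.host.com", the port defaults to 80, and the path sent in the request line is "/dir/pl.html?file=thefile".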

Change Log 3c~3d:

Added the -r option to disable redirection; renamed -f to -h (no HEAD info).
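
The -r option comes down to one check before following a Location header. A minimal sketch, assuming redirects are signalled by the usual 3xx status codes: `parse_status`, `should_follow` and the `max_hops` limit are hypothetical, and the hop limit is an extra safeguard against redirect loops that the description above does not mention.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Parse the status code from a line such as "HTTP/1.1 302 Found".
   Returns -1 if the line does not look like an HTTP status line. */
int parse_status(const char *line)
{
    const char *sp;

    if (strncmp(line, "HTTP/", 5) != 0)
        return -1;
    sp = strchr(line, ' ');
    if (sp == NULL || !isdigit((unsigned char)sp[1]))
        return -1;
    return atoi(sp + 1);
}

/* Follow a redirect only if: the status is a redirect code, the response
   carried a Location header, -r (no_redirect) was not given, and fewer
   than max_hops redirects were followed so far (hypothetical loop guard). */
int should_follow(int status, int has_location, int no_redirect,
                  int hops, int max_hops)
{
    int is_redirect = status == 301 || status == 302 || status == 303 ||
                      status == 307 || status == 308;
    return is_redirect && has_location && !no_redirect && hops < max_hops;
}
```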

Nate Miller's glHttp program gave me the idea. His program failed to work with my HTTP server, so I decided to write my own file retrieval code to fix this.

- Full program source (MSVC6) and binary: httpget (30 KB)
